Today I have released a significant new version of my mail server benchmark Postal! The list of changes is below:

- Added new program bhm to listen on port 25 and send mail to /dev/null. This allows testing mail relay systems.

- Fixed a minor bug in reporting when compiled without SSL.

- Made postal write the date header in correct RFC2822 format.

- Removed the name-expansion feature. It confused many people and is not needed now that desktop machines typically have 1G of RAM. Now postal and rabid can have the same user-list file.

- Moved postal-list into the bin directory.

- Changed the thread stack size to 32K (it used to be the default of 10M) to reduce virtual memory use (not that this makes much difference to anything other than the maximum number of threads on i386).

- Added a minimum message size option to Postal (now you can use fixed sizes).

- Added a Postal option to specify a list of sender addresses separately to the list of recipient addresses.

- Removed some unnecessary error messages.

- Handle EINTR to allow ^Z and “bg” from the command line. I probably don’t handle all cases, but now that I agree that failure to handle ^Z is an error I expect bug reports.

- Made the test programs display output on the minute. Previously they displayed once per minute at whatever offset they happened to start on (e.g. 11:10:35), while now it will be at 11:10:00. This also means that the first minute reported will have something less than 60 seconds of data; this does not matter as a mail server takes longer than that to get up to speed.

- Added support for GNUTLS and made the Debian package build with it. Note that BHM doesn’t yet work correctly with TLS.

- Made the programs exit cleanly.
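The "on the minute" reporting change in the list above can be illustrated with a small calculation. This is only a sketch of the idea, not Postal's actual code: the function name is hypothetical, and times are given as seconds since midnight to keep the arithmetic visible.

```python
def seconds_until_next_minute(now_seconds: int) -> int:
    """Return how long to wait so the next report lands on a minute boundary.

    now_seconds is the current time as seconds since midnight
    (a simplification chosen for this sketch).
    """
    remainder = now_seconds % 60
    # Exactly on a boundary: the next report is a full minute away.
    return 60 - remainder if remainder else 60


# A run started at 11:10:35 reports first at 11:11:00,
# so the first interval only covers part of a minute.
start = 11 * 3600 + 10 * 60 + 35
first_interval = seconds_until_next_minute(start)
print(first_interval)
```

Every later interval is a full 60 seconds, which is why only the first reported minute is short.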

Thanks to Inumbers for sponsoring the development of Postal.

I presented a paper on mail server performance at OSDC 2006 that was based on the now-released version of Postal.

I’ve been replying to a number of email messages in my Postal backlog, some dating back to 2001. Some of the people had changed email address during that time so I’ll answer their questions in my blog instead.

/usr/include/openssl/kssl.h:72:18: krb5.h: No such file or directory

In file included from /usr/include/openssl/ssl.h:179

One problem reported by a couple of people is the above error when compiling on an older Red Hat release (RHL or RHEL3). Running ./configure --disable-ssl should work around that problem (at the cost of losing SSL support). As RHEL3 is still in support I plan to fix this bug eventually.

There was a question about how to get detailed information on what Postal does. All three programs support the options -z and -Z to log details of what they do. This isn’t convenient if you only want a small portion of the data but can be used to obtain any information you desire.

One user reported that options such as -p5 were not accepted. Apparently their system had a broken implementation of the getopt(3) library call used to parse command-line parameters.

It appears that some people don’t understand what right-wing means in terms of politics, apart from using it as a general term of abuse.

I recommend visiting the site http://www.politicalcompass.org/ to see your own political beliefs (as determined by a short questionnaire) graphed against some famous people. The unique aspect of the Political Compass is that they separate economic and authoritarian values. Stalinism is listed as extreme authoritarian-left and Thatcherism as medium authoritarian-right. Nelson Mandela and the Dalai Lama are listed as liberal-left.

I score -6.5 on the economic left/right index and -6.46 on the social libertarian/authoritarian index, which means that I am fairly strongly liberal-left. Previously the Political Compass site would graph results against famous people, but they have since removed the combined graph feature and the scale from the separate graphs. Thus I can’t determine whether their analysis of the politics of Nelson Mandela and the Dalai Lama indicates that one of those men has beliefs that more closely match mine than the other. I guess that this is because the famous politicians did not take part in the survey and an analysis of their published material was used to assess their beliefs, which would lead to less accuracy.

The Wikipedia page on Right-Wing Politics provides some useful background information. Apparently before the French revolution in the Estates General the nobility sat on the right of the president’s chair. The tradition of politically conservative representatives sitting on the right of the chamber started there. I believe that such seating order is still used in France, while in the rest of the world the terms left and right are used independently of seating order.

Right-wing political views need not be associated with intolerance. If other Debian developers decide to publish their political score as determined by the Political Compass quiz then I’m sure that we’ll find that most political beliefs are represented, and I’m sure that most people will discover that someone who they like has political ideas that differ significantly from their own.

This morning at LCA Andrew Tanenbaum gave a talk about Minix 3 and his work on creating reliable software.

He cited examples of consumer electronics devices such as TVs that supposedly don’t crash. However in the past I have power-cycled TVs after they didn’t behave as desired (not sure if it was a software crash – but that seems like a reasonable possibility) and I have had a DVD player crash when dealing with damaged disks.

It seems to me that there are two reasons that TV and DVD failures aren’t regarded as a serious problem. One is that there is hardly any state in such devices, and most of that is not often changed (long-term state such as frequencies used for station tuning is almost never written and therefore unlikely to be lost on a crash). The other is that the reboot time is reasonably short (generally less than two seconds). So when (not if) a TV or DVD player crashes the result is a service interruption of two seconds plus the time taken to get to the power point and no loss of important data. If this sort of thing happens less than once a month then it’s likely that it won’t register as a failure with someone who is used to rebooting their PC once a day!

Another example that was cited was cars. I have been wondering whether there are any crash situations for a car electronic system that could result in the engine stalling. Maybe sometimes when I try to start my car and it stalls it’s really doing a warm-boot of the engine control system.

Later in his talk Andrew produced the results of killing some Minix system processes, which show minimal interruption to service (killing an Ethernet device driver every two seconds decreased network performance by about 10%). He also described how some service state is stored so that it can be used if the service is restarted after a crash. Although he didn’t explicitly mention it in his talk, it seems that he has followed the minimal data loss plus fast recovery features that we are used to seeing in TVs and DVD players.

The design of Minix also has some good features for security. When a process issues a read request it will grant the filesystem driver access to the memory region that contains the read buffer, and nothing else. It seems likely that many types of kernel security bug that would compromise systems such as Linux would not be a serious problem on Minix. Compromising a driver for a filesystem that is mounted nosuid and nodev would not allow any direct attacks on applications.

Every delegate of LCA was given a CD with Minix 3. I’ll have to install it on one of my machines and play with it. I may put a public access Minix machine online at some time if there is interest.

Firstly for smooth running of the presentations it would be ideal if laptops were provided for displaying all presentations (obviously this wouldn’t work for live software demos but it would work well for the slide-show presentations). Such laptops need to be tested with the presentation files that will be used for the talks (or pre-release versions that are produced in the same formats). It’s a common problem that the laptops owned by the speakers have trouble connecting to the projectors used at the conference, which can waste time and give a low quality display. Another common problem is that laptops owned by the conference often have different versions of the software used for the slides, which renders them differently. The classic example of this is OpenOffice 1.x and 2.x, which render presentations differently such that using the wrong one results in some text being off-screen.

The easy solution to this is for the conference organizers to provide laptops that have multiple boot options for different distributions. Any laptop manufactured in the last 8 years will have enough disk space for the latest release of Debian and the last few Fedora releases. As such machines won’t be on a public network there’s no need to apply security updates, and therefore a machine can be used at conferences in successive years. A 400MHz laptop with 384M of RAM is quite adequate for this purpose while also being so small that it will sell cheaply.

A slightly better solution would be to have laptops running Xen. It’s not difficult to set up Xephyr in fullscreen mode to connect to a Xen image. You could have several Xen instances running with NFS file sharing so that the speaker could quickly test out several distributions to determine which one gives the best display of their notes. This would also allow speakers to bring their own Xen images.

This is especially important if you want to run lightning talks. When only 5 minutes are allocated for a talk you can’t afford to waste 2 minutes in setting up a presentation!

In other news Dean Wilson gave my talk yesterday a positive review.

This afternoon I gave a talk at the Debian mini-conf of LCA on security improvements that are needed in Debian. The notes are online here.

The talk didn’t go quite as well as I had desired. I ended up covering most of the material in about half the allotted time and I could tell that the talk was too technical for many audience members (perhaps 1/4 of the audience lost interest). But the people who were interested asked good questions (and used the remainder of the time). Some of the people who are involved in serious Debian coding were interested (and I’ll file a bug report based on information from one of them after making this post).

I believe that I was quite successful in my main aim of giving Debian developers ideas for improving the security of Debian. My second aim of educating the users about options that are available now (with some inconvenience) and will be available shortly in a more convenient form was partially successful.

The main content of my talk was based on the lightning talk I gave for OSDC, but was more detailed.

After my talk I spoke to Crispin Cowan from Novell about some of these issues. He agrees with me about the need for more capabilities which I take as a very positive sign.

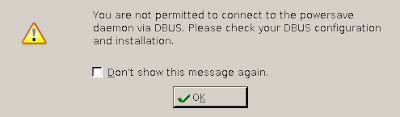

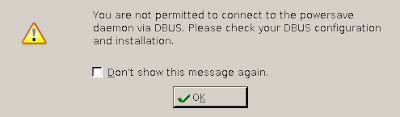

Strange dbus error from the KDE power monitoring tool

We have a list of 10 (famous) girl geeks from CNET and one from someone else.

The CNET list has Ada Byron, Grace Hopper, Mary Shelley, and Marie Curie. Mary Shelley isn’t someone who I’d have listed, but it does seem appropriate now I think about it. Marie Curie is one of the top geeks of all time (killing yourself through science experiments has to score bonus geek points). I hope that there are better alternatives to items 4, 7, 9, and 10 on the CNET list.

The list from someone else has 9 women I’ve never heard of. If we are going to ignore historical figures (as done in the second list) but want to actually list famous women then the list seems to be short. If we were to make a list of women who are known globally (which would mean excluding women who are locally famous in Australia, or in Debian for example), the only really famous female geek that I can think of is Pamela from Groklaw.

The process of listing the top female geeks might have been started as an attempt to give a positive list of the contributions made by women. Unfortunately it seems to highlight the fact that women are lacking from leadership positions. There seem to be no current women who are in positions comparable to Linus, Alan, RMS, ESR, Andrew Tanenbaum, or Rusty (note that I produced a list of 6 famous male geeks with little thought or effort).

Kirrily has written an interesting article on potential ways of changing this.

A post by Scott James Remnant describes how to hide command-line options from ps output. It’s handy to know that, but the post made one significant implication that I strongly disagree with. It said about command-line parameters “perhaps they contain sensitive information”. If the parameters contain sensitive information then merely hiding them after the fact is not what you want to do, as it exposes a race condition!

One option is for the process to receive its sensitive data via a pipe (either piped from another process or from a named pipe that has restrictive permissions). Another option is to use SE Linux to control which processes may see the command-line options for the program in question.

In any case removing the data shortly after hostile parties have had a chance to see it is not the solution.
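As a rough illustration of the pipe option, here is a minimal sketch in which a parent hands a secret to a child over the child's standard input, so the secret never appears in the command line at any time. The child program text and the function name are hypothetical, made up for this example.

```python
import subprocess
import sys

# Hypothetical child program: reads the secret from stdin, not from argv.
# It only prints the length so the secret itself is never echoed.
CHILD = "import sys; secret = sys.stdin.readline().strip(); print(len(secret))"


def run_with_secret(secret: str) -> str:
    """Start the child and pass the secret through a pipe instead of argv."""
    result = subprocess.run(
        [sys.executable, "-c", CHILD],  # argv contains no sensitive data
        input=secret + "\n",            # the secret travels over the pipe
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.strip()


print(run_with_secret("hunter2"))
```

Because the secret is never placed in the argument list, there is no window in which other users could read it from ps output, so nothing needs to be hidden after the fact.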

Apart from that it’s a great post by Scott.

- echo 1 > /proc/sys/vm/block_dump

The above command sets a sysctl to cause the kernel to log all disk reads and writes, as well as dirtied inodes. Below is a sample of the output from it. Beware that there is a lot of data.

Jan 10 09:05:53 aeon kernel: kjournald(1048): WRITE block XXX152 on dm-6

Jan 10 09:05:53 aeon kernel: kjournald(1048): WRITE block XXX160 on dm-6

Jan 10 09:05:53 aeon kernel: kjournald(1048): WRITE block XXX168 on dm-6

Jan 10 09:05:54 aeon kernel: kpowersave(5671): READ block XXX384 on dm-7

Jan 10 09:05:54 aeon kernel: kpowersave(5671): READ block XXX400 on dm-7

Jan 10 09:05:54 aeon kernel: kpowersave(5671): READ block XXX408 on dm-7

Jan 10 09:05:54 aeon kernel: bash(5803): dirtied inode XXXXXX1943 (block_dump) on proc
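Since there is a lot of data, it can help to summarise it per process. The following is a small sketch that counts READ and WRITE lines by process name; the regular expression is my own guess based only on the sample lines above, so it may need adjusting for other kernel versions or syslog formats.

```python
import re
from collections import Counter

# Matches lines such as:
#   "... kernel: kjournald(1048): WRITE block XXX152 on dm-6"
LINE_RE = re.compile(
    r"kernel: (?P<proc>[^\s(]+)\((?P<pid>\d+)\): (?P<op>READ|WRITE) block"
)


def count_block_io(lines):
    """Count READ/WRITE log entries per (process name, operation)."""
    counts = Counter()
    for line in lines:
        match = LINE_RE.search(line)
        if match:  # lines such as "dirtied inode" are skipped
            counts[(match.group("proc"), match.group("op"))] += 1
    return counts


SAMPLE = [
    "Jan 10 09:05:53 aeon kernel: kjournald(1048): WRITE block XXX152 on dm-6",
    "Jan 10 09:05:53 aeon kernel: kjournald(1048): WRITE block XXX160 on dm-6",
    "Jan 10 09:05:53 aeon kernel: kjournald(1048): WRITE block XXX168 on dm-6",
    "Jan 10 09:05:54 aeon kernel: kpowersave(5671): READ block XXX384 on dm-7",
    "Jan 10 09:05:54 aeon kernel: kpowersave(5671): READ block XXX400 on dm-7",
    "Jan 10 09:05:54 aeon kernel: kpowersave(5671): READ block XXX408 on dm-7",
    "Jan 10 09:05:54 aeon kernel: bash(5803): dirtied inode XXXXXX1943 (block_dump) on proc",
]

for (proc, op), n in sorted(count_block_io(SAMPLE).items()):
    print(proc, op, n)
```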

- Prefixing a bash command with ‘ ‘ will prevent a ! operator from running it. For example if you had just entered the command “ ls -al /” then “!l” would not repeat it but would instead match the preceding command that started with an ‘l’. On SLES-10 a preceding space also makes the command not appear in the history, while on Debian/etch it does appear (both run Bash 3.1).

- LD_PRELOAD=/lib/libmemusage.so ls > /dev/null

The above LD_PRELOAD will cause a dump to stderr of data about all memory allocations performed by the program in question. Below is a sample of the output.

Memory usage summary: heap total: 28543, heap peak: 20135, stack peak: 9844

total calls total memory failed calls

malloc| 85 28543 0

realloc| 11 0 0 (in place: 11, dec: 11)

calloc| 0 0 0

free| 21 12107

Histogram for block sizes:

0-15 29 30% ==================================================

16-31 5 5% ========

32-47 10 10% =================

48-63 14 14% ========================

64-79 4 4% ======

80-95 1 1% =

96-111 20 20% ==================================

112-127 2 2% ===

208-223 1 1% =

352-367 4 4% ======

384-399 1 1% =

480-495 1 1% =

1536-1551 1 1% =

4096-4111 1 1% =

4112-4127 1 1% =

12800-12815 1 1% =

Recently there has been some really hot weather in Melbourne that made me search for alternate methods of cooling.

The first and easiest method I discovered is to keep a 2L bottle of water in my car. After it’s been parked in the sun on a hot day I pour the water over the windows. The energy required to evaporate water is 2500 Joules per gram. This means that the 500ml that probably evaporates from my car (I guess that 1.5L is spilt on the ground) would remove 1.25MJ of energy from my car. This makes a significant difference to the effectiveness of the air-conditioning (the glass windows being the largest hot mass that can easily conduct heat into the cabin).
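The arithmetic behind the 1.25MJ figure is sketched below, using the numbers from the paragraph above and assuming that 500ml of water weighs 500g.

```python
# Figures taken from the text; 1 ml of water is assumed to weigh 1 g.
LATENT_HEAT_VAPORISATION_J_PER_G = 2500
evaporated_g = 500

energy_j = evaporated_g * LATENT_HEAT_VAPORISATION_J_PER_G
energy_mj = energy_j / 1e6
print(energy_mj)
```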

It would be good if car designers could incorporate this feature. Every car has a system to spray water on the wind-screen to wash it, if that could be activated without the wipers then it would cool the car significantly. Hatch-back cars have the same on the rear window, and it would not be difficult at the design stage to implement the same for the side windows too.

The next thing I have experimented with is storing some ice in a room that can’t be reached by my home air-conditioning system. Melting ice absorbs 333 Joules per gram. An adult who is not doing any physical activity will produce about 100W of heat, that is 360KJ per hour. Melting a kilo of ice will absorb 333KJ. If the energy absorbed as the melt-water warms towards room temperature is factored in, then a kilo of ice comes close to absorbing the heat that an adult at rest produces in an hour. Therefore 10Kg of ice stored in your bedroom will prevent you from heating it by your body heat during the course of a night.
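The comparison can be worked through as follows. The 100W and 333 Joules per gram figures come from the text; the specific heat of water (about 4.18 J per gram per degree) and the 25C room temperature are my own assumptions for this sketch, and the result shifts with the temperature chosen.

```python
ADULT_HEAT_W = 100                    # from the text
LATENT_HEAT_FUSION_J_PER_G = 333      # from the text
WATER_SPECIFIC_HEAT_J_PER_G_C = 4.18  # assumption: standard textbook value
ROOM_TEMP_C = 25                      # assumption: melt-water warms from 0C to 25C
ICE_G = 1000

# Heat produced by a resting adult in one hour.
adult_kj_per_hour = ADULT_HEAT_W * 3600 / 1000

# Heat absorbed by melting the ice, then by warming the melt-water.
melt_kj = ICE_G * LATENT_HEAT_FUSION_J_PER_G / 1000
warm_kj = ICE_G * WATER_SPECIFIC_HEAT_J_PER_G_C * ROOM_TEMP_C / 1000
total_kj = melt_kj + warm_kj

print(adult_kj_per_hour, melt_kj, round(total_kj, 1))
print(round(total_kj / adult_kj_per_hour, 2), "hours of adult heat per kilo")
```

Under these assumptions a kilo of ice covers roughly an hour of body heat, which is consistent with 10Kg lasting a night.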

In some quick testing I found that 10Kg of ice in three medium sized containers would make a small room up to two degrees cooler than the rest of the house. The ice buckets also have water condense on them. In a future experiment I will measure the amount of condensation and try to estimate the decrease in the humidity. Lower humidity makes a room feel cooler as sweat will evaporate more easily. Ice costs me $3 per 5Kg bag, so for $6 I can make a hot night significantly more bearable. In a typical year there are about 20 unbearably hot nights in Melbourne. So for $120 I can make one room cooler on the worst days of summer without the annoying noise of an air-conditioner (the choice of not sleeping due to heat or not sleeping due to noise sucks).

The density of dry air at 0C and a pressure of 101.325 kPa is 1.293 g/L.

A small bedroom might have an area of 3m*3m and be 2.5m high, giving a volume of 22.5m^3 = 22,500L. 22,500 * 1.293 = 29,092.5g of air.

One Joule can raise the temperature of one gram of cool dry air by 1C.

Therefore when a kilo of ice melts it would be able to cool the air in such a room by more than 10 degrees C! The results I observe are much smaller than that. Obviously the walls, floor, ceiling, and furnishings in the room also have some thermal energy, and as the insulation is not perfect some heat will get in from other rooms and from outside the house.
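The estimate above can be reproduced step by step. All of the figures are the ones quoted in the text, and the calculation deliberately ignores everything except the air in the room, which is why it is an upper bound.

```python
AIR_DENSITY_G_PER_L = 1.293           # dry air at 0C and 101.325 kPa
AIR_SPECIFIC_HEAT_J_PER_G_C = 1.0     # "one Joule per gram per degree"
LATENT_HEAT_FUSION_J_PER_G = 333

# 3m * 3m * 2.5m room, with 1000 litres per cubic metre.
room_volume_l = 3 * 3 * 2.5 * 1000
air_mass_g = room_volume_l * AIR_DENSITY_G_PER_L

# Energy absorbed by melting one kilo of ice.
ice_energy_j = 1000 * LATENT_HEAT_FUSION_J_PER_G

# Temperature drop if that energy came only from the air.
delta_t_c = ice_energy_j / (air_mass_g * AIR_SPECIFIC_HEAT_J_PER_G_C)
print(round(air_mass_g, 1), round(delta_t_c, 1))
```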

If you have something important to do the next day then spending $6 or $12 on ice the night before is probably a good investment. It might even be possible to get your employer to pay for it. I’m sure that paying for ice would provide better benefits in employee productivity than many things that companies spend money on.
